Connecting the Dots: How AI Supports the Clinical Coding Process

A human coder's greatest skill is the ability to connect the dots. A coder can read a lab result on page 7 of a chart, recall a medication listed on page 3, and synthesize that information to support a specific diagnosis. This takes deep clinical knowledge and focus, and sustaining it across thousands of charts is a monumental task, which is why incorrectly linked manifestations are such a common issue.

What if you could give your coders an intelligent assistant that performs the initial, time-consuming review instantly, flagging potential connections for their expert validation? That's not a future concept; it's exactly how MedChartScan empowers expert coders today.

Let's move beyond feature lists and look at a real-world example from our platform.

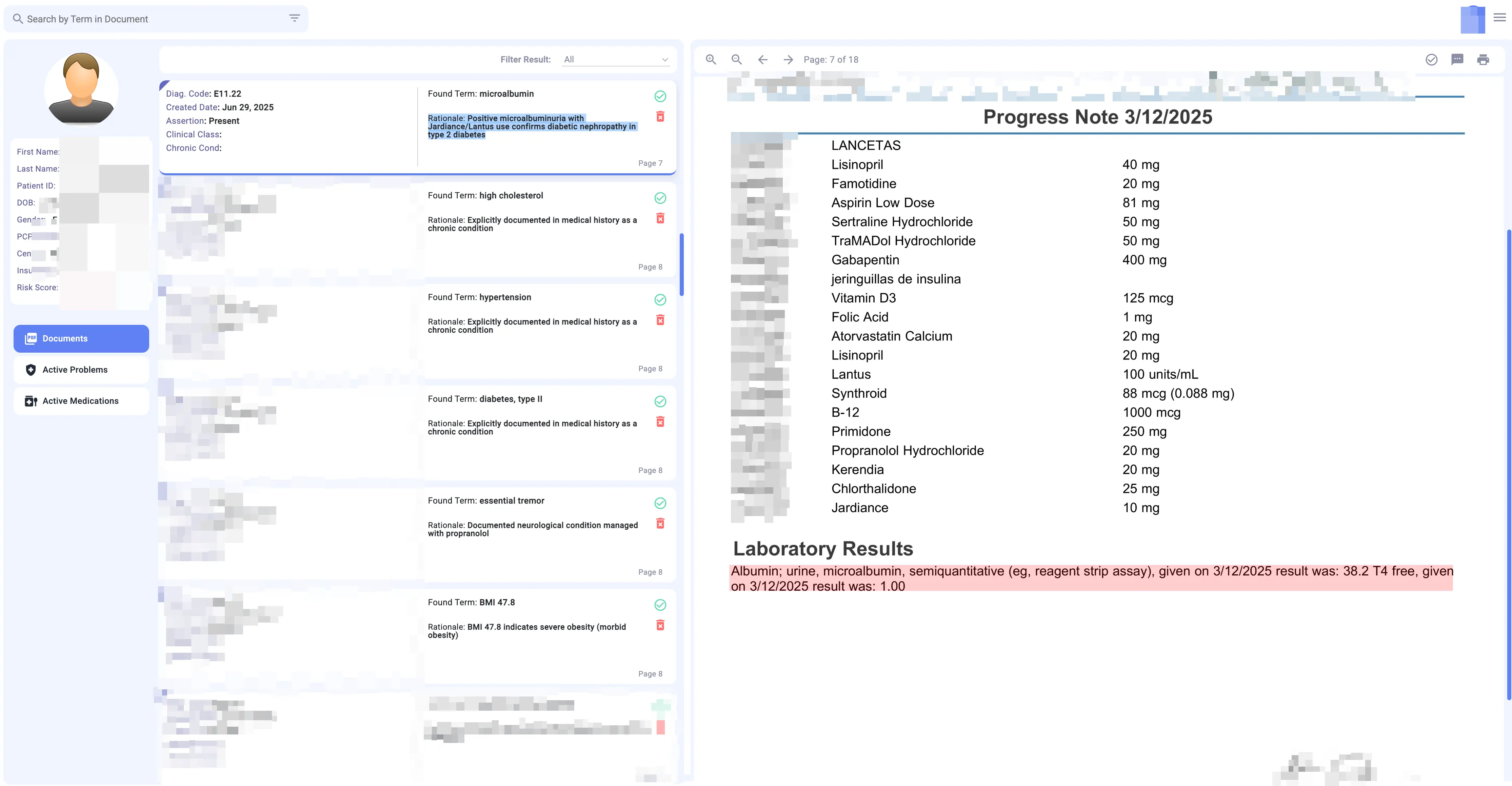

Let's focus on the top suggestion: A potential link to Diag. Code: E11.22 (Type 2 diabetes mellitus with diabetic chronic kidney disease).

This is a high-value, specific code that is frequently missed when coders are under pressure. A manual reviewer might just see "diabetes" and code the less specific E11.9 (Type 2 diabetes mellitus without complications). So, how did our AI help the coder arrive at this more accurate conclusion?

It performed a multi-step analysis to surface key evidence for the coder:

- It Found a Key Lab Value: The AI scanned the entire document and, in the "Laboratory Results" section on page 7, identified and highlighted the term "microalbuminuria." It flagged this as a potential clinical indicator for nephropathy (kidney disease).

- It Identified Related Medications: The AI also analyzed the patient's medication list, highlighting "Jardiance" and "Lantus," which it recognizes as common treatments for Type 2 diabetes.

- It Presented a Potential Link: This is the critical step. The AI doesn't make a final decision. It presents a logical suggestion to the human expert, with all the evidence neatly compiled. The rationale presented to the coder is clear:

  Suggested Rationale for Review: Positive microalbuminuria with Jardiance/Lantus use may confirm diabetic nephropathy in type 2 diabetes.

*This is the power of collaborative AI. Imagine your team reviewing charts with this level of insight. Schedule a demo to see it live.*

The AI surfaced a potential link between a clinical indicator and active treatments, but the final determination is, and always should be, made by the certified coder.
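
For readers who want to picture the mechanics, here is a minimal Python sketch of the "surface evidence, then hand off to a human" pattern described above. It is an illustration only, not MedChartScan's actual implementation: the lookup tables, the `Evidence` and `Suggestion` classes, the `find_terms` and `suggest_link` functions, and the simple keyword matching are all assumptions made for this example. A production system would likely rely on far richer clinical language processing.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical lookup tables for illustration only (not MedChartScan's rules).
INDICATOR_TERMS = {"microalbuminuria": "potential indicator for nephropathy"}
DIABETES_MEDICATIONS = {"jardiance", "lantus"}


@dataclass
class Evidence:
    page: int
    term: str
    note: str


@dataclass
class Suggestion:
    code: str
    evidence: list = field(default_factory=list)
    # Every suggestion starts unapproved; only a coder can change this.
    status: str = "needs_coder_review"


def find_terms(pages, terms):
    """Return (page, term) pairs for each term found on a page, ignoring case."""
    hits = []
    for page, text in sorted(pages.items()):
        lowered = text.lower()
        for term in sorted(terms):
            if term in lowered:
                hits.append((page, term))
    return hits


def suggest_link(pages) -> Optional[Suggestion]:
    """Suggest E11.22 for review only when both kinds of evidence are present."""
    indicators = find_terms(pages, INDICATOR_TERMS)
    medications = find_terms(pages, DIABETES_MEDICATIONS)
    if not indicators or not medications:
        return None  # not enough evidence to surface a suggestion
    evidence = [Evidence(p, t, INDICATOR_TERMS[t]) for p, t in indicators]
    evidence += [
        Evidence(p, t, "common Type 2 diabetes treatment") for p, t in medications
    ]
    return Suggestion(code="E11.22", evidence=evidence)


# Sample chart text, keyed by page number (made up for this example).
chart = {
    3: "Medications: Jardiance, Lantus",
    7: "Laboratory Results: microalbuminuria present",
}
suggestion = suggest_link(chart)
```

Note that the sketch never sets the status to anything other than `needs_coder_review`. That mirrors the workflow above: the software compiles evidence, and the certified coder decides.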

Why This Collaborative Approach Changes Everything

This single example highlights three core benefits of this "AI-powered, human-validated" workflow:

- Enhanced Accuracy: By flagging subtle connections across lengthy documents, the AI helps coders identify the most specific and accurate HCCs (Hierarchical Condition Categories), preventing under-coding without sacrificing human oversight.

- Radical Efficiency: The AI handles the exhaustive initial search, allowing your expert coders to bypass hours of manual review and focus directly on high-value validation and complex cases.

- Built-in Audit Protection: The platform provides a clear suggested justification for the code. When the coder validates it, the system creates a permanent, defensible audit trail linking the final code to the specific, human-approved evidence.
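
To make the audit-trail idea concrete, here is a small Python sketch of what a record linking a final code to human-approved evidence might look like. The `AuditRecord` class, the `validate_suggestion` function, and every field name are hypothetical and chosen for this example; they do not describe MedChartScan's actual data model. The frozen dataclass only prevents changes in memory, so real permanence would depend on how records are stored.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)  # frozen: fields cannot be reassigned after creation
class AuditRecord:
    final_code: str
    approved_evidence: tuple
    coder_id: str
    validated_at: datetime


def validate_suggestion(code, evidence, coder_id, approved_indexes):
    """Create an audit record from the evidence items the coder approved."""
    approved = tuple(evidence[i] for i in approved_indexes)
    if not approved:
        # A code with no human-approved evidence is never finalized.
        raise ValueError("A code cannot be finalized without approved evidence.")
    return AuditRecord(
        final_code=code,
        approved_evidence=approved,
        coder_id=coder_id,
        validated_at=datetime.now(timezone.utc),
    )


# Example: the coder approves the lab finding and one medication.
record = validate_suggestion(
    "E11.22",
    ["page 7: microalbuminuria", "medication list: Jardiance", "medication list: Lantus"],
    coder_id="coder-001",
    approved_indexes=[0, 1],
)
```

The design choice worth noting is that the record stores only the evidence the coder approved, not everything the AI surfaced, so the trail reflects the human decision.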

This is the future of risk adjustment. It's not about replacing coders; it's about equipping them with a powerful co-pilot that amplifies their expertise, ensures accuracy, and creates a safer, more compliant workflow. To learn more about the technology that makes this possible, read our deep dive on how AI actually transforms the risk adjustment workflow.